How a chatbot works: A complete guide

Discover how chatbots work, from AI and NLP to automation. Learn how they process queries and streamline business communication.


We ask a question and get an instant reply - friendly, precise, even a little too human. It's not a person, and it's definitely not magic. It's a chatbot, and a whole engine of language, architecture, and re-learning is working behind that interface. What's behind that curtain? Here's all about how a chatbot works.

What is a chatbot?

A chatbot is a computer program that uses Natural Language Processing (NLP) and Machine Learning (ML) to simulate human conversation. It can answer queries, collect information, resolve issues, guide visitors through offerings, and more.

Now, what's the architecture underneath a chatbot?

Chatbot architecture

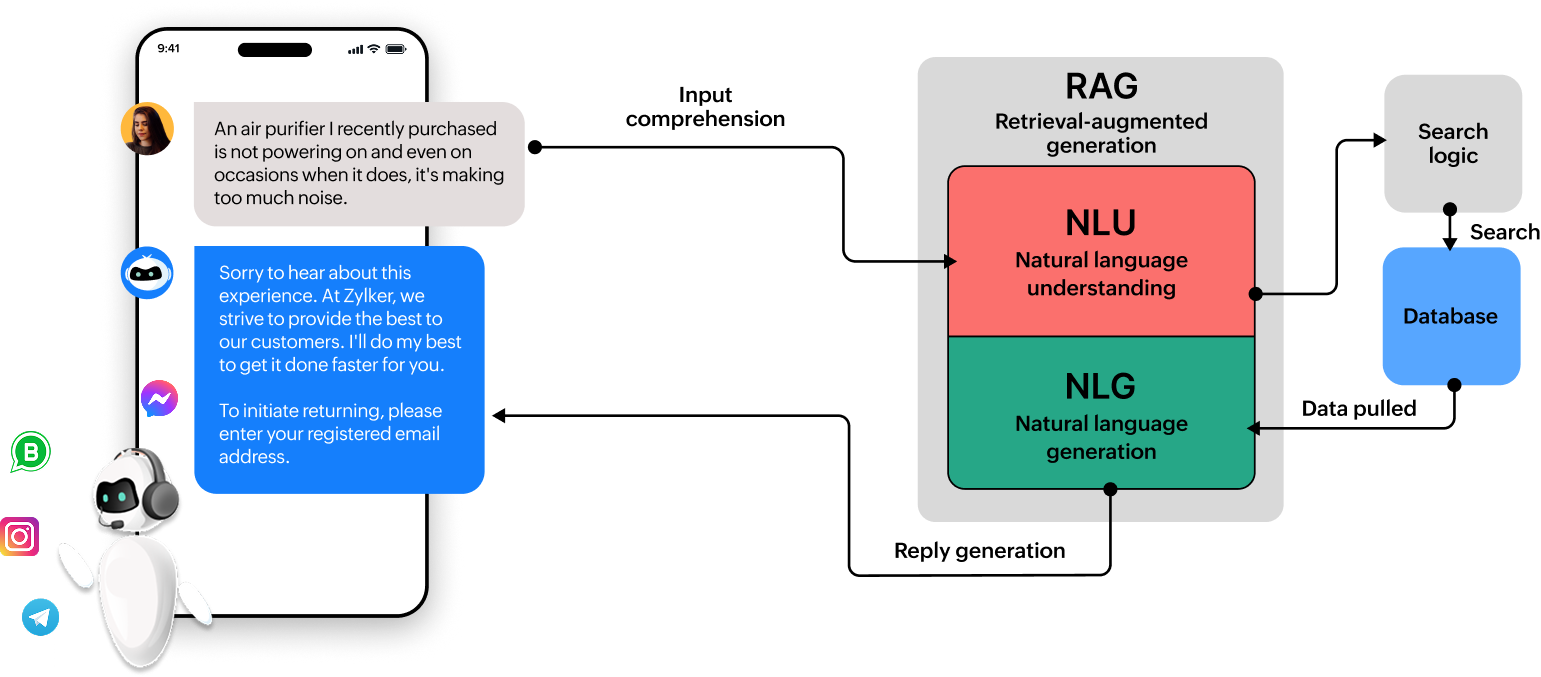

When someone initiates a conversation with chatbots, the process happens in three stages:

Stage 1: Understanding the user's input

Natural Language Understanding (NLU), a sub-element of NLP, decodes the user's input into a machine-readable format. As humans don't exactly speak in a way that machines naturally understand, NLU helps chatbots understand the user's query and its context.

The Intent Classifier, one of the two components in the NLU engine, maps what the user has asked to the action the chatbot should take next. The second component, the Entity Extractor, picks out the keywords in the input (such as names, dates, or order numbers) that pin down what the question is about.

Interacting with humans requires the chatbot to understand the emotion behind the queries, as this helps it add emotional value to the conversation. With sentiment analysis, the chatbot analyzes the user's tone, categorizes the sentiment, and uses it as a reference while drafting a reply that meets the visitor's emotional needs.
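To make the sentiment step concrete, here is a minimal sketch that scores a message against two small word lists and picks an opening line to match. The word lists, function names, and opening lines are all hypothetical; production chatbots typically rely on a trained sentiment model rather than a fixed lexicon.

```python
# Hypothetical word lists, for illustration only.
POSITIVE = {"thanks", "great", "love", "perfect", "happy"}
NEGATIVE = {"angry", "terrible", "late", "broken", "frustrated", "worst"}


def sentiment(text: str) -> str:
    """Classify a message as positive, negative, or neutral by counting lexicon hits."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def opening_line(text: str) -> str:
    """Pick a reply opener that matches the detected tone."""
    openers = {
        "negative": "I'm sorry about the trouble. Let me look into this right away.",
        "positive": "Glad to hear that! Here's what I found.",
        "neutral": "Sure, here's what I found.",
    }
    return openers[sentiment(text)]


print(opening_line("My order is late and the box is broken!"))
```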

Stage 2: Searching the database

Chatbots aren't intelligent on their own. So, after understanding the user's query, they'll look through the knowledge base resources attached to them to find the information the user is looking for.

This is achieved via backend integrations between the chatbot and the business database.

Stage 3: Drafting and sharing replies

The information pulled out of the database that answers the user's query is compiled into a suitable response using Natural Language Generation (NLG), the other sub-element of NLP.

Retraining

There are two additional elements involved in the chatbot architecture:

1) Dialogue management unites all three stages to ensure the flow of the conversation is coherent and keeps the context relevant. The technology underneath dialogue management varies depending on the type of chatbot used.

2) Every conversation cycle is monitored and fed back into the system as a feedback loop, so the chatbot can learn and refine how it handles these three stages. This feedback-driven refinement is where ML comes in.

This is the crux of how chatbots work. Now let's explore the types of chatbots available in the market and how each works.

Types of chatbots

Since the inception of chatbot technology, many types of chatbots have become mainstream. The major ones that are still relevant can be grouped under the following types:

Flow chatbots

Flow chatbots are basic, pre-defined bots mainly used to collect information from users.

FAQ chatbots

FAQ chatbots use integrated resources to answer visitor queries by providing relevant information from a knowledge base.

AI chatbots

The advanced conversational AI chatbots can understand the context and emotion to generate empathetic, human-like responses beyond simple keyword matching.


How do chatbots work?

But how do all these chatbots work? What's the technology behind these chatbots?

Flow chatbots

Flow chatbots, also called rule-based chatbots, don't understand the context of the conversation, as they work based on a set of pre-determined rules. Since the flow is already established, the architecture of flow chatbots is fairly simple and straightforward. To identify the components involved in flow chatbots, they can be broken down into the same three stages we discussed earlier:

Stage 1: Understanding the user's input

When a user initiates a conversation with the flow chatbot:

1) The tokenizer splits the input into readable words or phrases for the machine to understand.

2) A pattern matcher compares the resulting words or phrases with the patterns stored in the flow chatbot.

The tokenizer and the Pattern Matcher have limited potential in rule-based flow chatbots because users will have limited scope to enter entirely new queries independently. Most of the time, options will be shared with the users, and they'll be asked to select from them. They'll be taken to a different flow based on what they choose.
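The two steps above can be sketched in a few lines. This is only an illustration: the pattern table and the flow names (`order_status`, `refund`) are hypothetical, and a real flow chatbot would usually store its patterns alongside the flow definition.

```python
import re

# Hypothetical patterns, each mapped to the name of a flow.
PATTERNS = {
    "order_status": re.compile(r"\b(where|track|status)\b.*\border\b"),
    "refund": re.compile(r"\b(refund|money back)\b"),
}


def tokenize(text):
    """Lowercase the input and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def match_flow(text):
    """Return the first flow whose pattern matches the tokenized input, else None."""
    normalized = " ".join(tokenize(text))
    for flow, pattern in PATTERNS.items():
        if pattern.search(normalized):
            return flow
    return None


print(tokenize("Where is my order?"))
print(match_flow("Where is my order?"))
```

When `match_flow` returns `None`, a flow chatbot would typically fall back to showing its menu of options again.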

Stage 2: Searching the database

Before deploying flow chatbots, a decision tree or flow-based logic will be written to define how the flow chatbot should work.

For example, if the user selects the option "I'm yet to receive my order," the pre-defined flow will show the next prompt, "Can you please enter your order number," so that it can search through the orders to get the information needed by the user.

The dialogue manager doesn't have a prominent role here, as the chatbot follows pre-defined rules. The rules themselves establish the context of the conversation, and it's not dynamic.
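A decision tree like this can be represented as a plain lookup table. The sketch below reuses the order example from above; the node names, the extra "agent" option, and the `next_node` helper are hypothetical.

```python
# Each node has a prompt and a mapping from the option the user picks to the next node.
FLOW = {
    "start": {
        "prompt": "How can I help you?",
        "options": {
            "I'm yet to receive my order": "ask_order_number",
            "I want to talk to an agent": "handoff",
        },
    },
    "ask_order_number": {
        "prompt": "Can you please enter your order number",
        "options": {},
    },
    "handoff": {
        "prompt": "Connecting you to an agent.",
        "options": {},
    },
}


def next_node(current, choice):
    """Follow the pre-defined rule; stay on the current node if the choice is unknown."""
    return FLOW[current]["options"].get(choice, current)


node = next_node("start", "I'm yet to receive my order")
print(FLOW[node]["prompt"])
```

Because every transition is written down in advance, the context of the conversation is simply the name of the current node, which is why no dynamic dialogue manager is needed.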

Stage 3: Drafting and sharing replies

Flow chatbots' replies are mostly static and are determined when the rules are established. However, variables can be included to personalize them to a certain extent.

For example, when the flow chatbot asks for the user's name, the input can be stored under the variable %name%, which can be later used to personalize the conversation. When the order status is retrieved from the database, it'll send the templated response "Your order is on the way, John. If you'd like to track it, click the link below."

On the backend, it can integrate with other applications or use API calls to get the information the user asks for. After retrieving the data, it'll be shared in a templated response.
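Here is a minimal sketch of how a templated response with %name%-style variables could be filled in. The `render` helper and the extra `%status%` variable are assumptions made for illustration; the status would normally come from the backend lookup described above.

```python
import re


def render(template, variables):
    """Replace each %key% with its stored value; leave unknown placeholders untouched."""
    return re.sub(
        r"%(\w+)%",
        lambda match: str(variables.get(match.group(1), match.group(0))),
        template,
    )


TEMPLATE = "Your order is %status%, %name%. If you'd like to track it, click the link below."

# "name" was collected earlier in the chat; "status" stands in for the retrieved order data.
print(render(TEMPLATE, {"name": "John", "status": "on the way"}))
```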

FAQ chatbots

Traditional FAQ chatbots have the additional scope of allowing users to type their query instead of just selecting the shared options. The breakdown of its architecture will look like:

Stage 1: Understanding the user's input

The raw text will first be cleaned and normalized. The tokenizer and the Pattern Matcher will then break the input down into machine-readable words and phrases. The input will also be passed through a synonym and spell-check engine that resolves variations in wording and spelling errors.

It'll give the chatbot a better understanding of what the user is asking. Since the chatbot will be integrated with a large volume of business collateral, such as articles, knowledge base resources, FAQs, and more, it needs to identify what the user is asking to figure out what to share with them.

Not everyone will have the same way of phrasing their query. So, by breaking down the phrases into simpler ones and passing them through the synonym and spell-checker engine, the chatbot will be able to identify the variations to search through the database to retrieve the information the user is looking for.
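The cleaning, synonym, and spell-check steps can be sketched with Python's standard library. The vocabulary and synonym table below are hypothetical, and `difflib.get_close_matches` is used here as a simple stand-in for a real spell-check engine.

```python
import difflib
import re

# Hypothetical vocabulary (the keywords the knowledge base is indexed by) and synonyms.
VOCABULARY = ["refund", "order", "delivery", "invoice", "password", "reset"]
SYNONYMS = {
    "shipping": "delivery",
    "shipment": "delivery",
    "bill": "invoice",
    "reimbursement": "refund",
}


def normalize(text):
    """Lowercase, tokenize, map synonyms, and correct near-miss spellings."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    result = []
    for token in tokens:
        token = SYNONYMS.get(token, token)
        # Snap the token to a vocabulary word only if it is a close match.
        close = difflib.get_close_matches(token, VOCABULARY, n=1, cutoff=0.8)
        result.append(close[0] if close else token)
    return result


print(normalize("refnd for my shipment"))
```

The `cutoff` value controls how aggressive the correction is: a lower cutoff fixes more typos but also risks rewriting words that were spelled correctly.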

Stage 2: Searching the database

Once the query is broken down, the identified keywords will be searched across the database using Keyword Matching. However, matching keywords has a downside: it marks all the documents that have the particular keyword. Even matching all the identified keywords won't guarantee a relevant answer for visitors.

To nullify this, FAQ chatbots will also do Pattern Matching, which matches the entire phrase with what's available in the database. When all phrases match, accuracy will be better than just keyword matching.

If only one entry in the database matches the keywords/phrases, the system will retrieve that information and move to the next stage. But if multiple entries match the user's query, Matching Logic will be introduced to identify which resource to share.

The logic can be based on specificity ranking (it gives precedence to more specific patterns over the generic patterns identified with the keywords), matching keyword counts (the entry with the highest number of matching keywords will be given precedence), or confidence score (it'll give one point per matched keyword in the selected entries and share the one that gets the maximum points). Based on the logic used, the entry will be selected for sharing.
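As an illustration of the keyword-count and confidence-score approaches, the sketch below gives each entry one point per matched keyword and ranks the entries by points. The FAQ entries and their keyword sets are hypothetical.

```python
# Hypothetical knowledge base: each entry is indexed by a set of keywords.
FAQ_ENTRIES = {
    "How do I reset my password?": {"reset", "password"},
    "How do I change my email address?": {"change", "email", "address"},
    "How do I reset my email password?": {"reset", "email", "password"},
}


def score_entries(query_keywords):
    """Give one point per matched keyword and return matching entries, best first."""
    query = set(query_keywords)
    scores = {}
    for title, keywords in FAQ_ENTRIES.items():
        points = len(keywords & query)
        if points:
            scores[title] = points
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


for title, points in score_entries(["reset", "email", "password"]):
    print(points, title)
```

Specificity ranking could be layered on top of this, for example by preferring the entry whose full keyword set is covered by the query.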

But what does the chatbot do when it can't figure out which one to share?

To handle such cases, the chatbot will be programmed with ambiguity matches. It'll share the most related entries identified with the users, leaving it up to them to get the answers. The bot will also have a fallback mechanism when it's not able to identify the match. It'll inform the user that it's not able to identify what they're looking for and ask them to be more specific.
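The ambiguity and fallback behavior can be sketched as a small decision function over a ranked list of (entry, score) pairs. The `margin` threshold, the return format, and the fallback wording are assumptions for illustration.

```python
FALLBACK = "Sorry, I couldn't find what you're looking for. Could you be more specific?"


def choose_reply(ranked, margin=1, max_suggestions=3):
    """Decide between a single answer, a list of suggestions, or the fallback message.

    `ranked` is a list of (entry, score) pairs sorted from best to worst.
    """
    if not ranked:
        # Nothing matched: ask the user to rephrase.
        return {"type": "fallback", "text": FALLBACK}
    best_score = ranked[0][1]
    # Entries scoring within `margin` of the best one are treated as equally plausible.
    close = [entry for entry, score in ranked if best_score - score < margin]
    if len(close) == 1:
        return {"type": "answer", "entry": close[0]}
    return {"type": "suggestions", "entries": close[:max_suggestions]}


print(choose_reply([("Reset your password", 2), ("Reset your email password", 2)]))
```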

Even though FAQ chatbots aren't powered by AI, they log all the conversations happening with them. In cases where they can't figure out what to share, they log those and share them with the technical team for further improvements.

Stage 3: Drafting and sharing replies

Once the answer is retrieved, the content users are looking for will be shared with them. The default formatting and rich content that needs to be shared will be pre-programmed into it, and the answer templates will be shared.

AI chatbots

One of the dynamic shifts that happened with the introduction of AI chatbots is the accuracy of the answers shared with the users and the conversational tone in interacting with them.

Stage 1: Understanding the user's input

In AI chatbots, in addition to the components we discussed above with the FAQ chatbots, the NLU comes with two more components: Intent Detection and Entity Recognition.

With Intent Detection, the chatbot tries to understand what the user is trying to achieve. To do this, modern intent detection classifiers use algorithms like logistic regression or neural networks that are trained with data.

What does Entity Recognition do here? It extracts the specific keywords from the user's inputs and uses them to refine the conversation's intent.
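Here is a toy sketch of both components using only the standard library: a bag-of-words logistic regression trained with gradient descent for intent detection, and a regular expression for entity recognition. The training phrases, the two intent labels, and the assumed order-number format (five or more digits) are all hypothetical; real classifiers are trained on far more data, usually with an ML library.

```python
import math
import re

# Hypothetical training data: label 1 = "order_status", label 0 = "refund".
TRAINING = [
    ("where is my order", 1),
    ("track my order", 1),
    ("order status please", 1),
    ("i want a refund", 0),
    ("refund my money", 0),
    ("give me my money back", 0),
]


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


VOCAB = sorted({tok for text, _ in TRAINING for tok in tokenize(text)})


def featurize(text):
    """Bag-of-words vector: 1.0 if the vocabulary word appears in the text, else 0.0."""
    present = set(tokenize(text))
    return [1.0 if word in present else 0.0 for word in VOCAB]


def probability(weights, bias, features):
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def train(epochs=500, learning_rate=0.5):
    """Fit logistic regression weights with stochastic gradient descent."""
    weights, bias = [0.0] * len(VOCAB), 0.0
    for _ in range(epochs):
        for text, label in TRAINING:
            features = featurize(text)
            error = label - probability(weights, bias, features)
            bias += learning_rate * error
            weights = [w + learning_rate * error * x for w, x in zip(weights, features)]
    return weights, bias


WEIGHTS, BIAS = train()


def detect_intent(text):
    p = probability(WEIGHTS, BIAS, featurize(text))
    return "order_status" if p >= 0.5 else "refund"


def extract_entities(text):
    # Assumed format: an order number is a run of five or more digits.
    return {"order_numbers": re.findall(r"\b\d{5,}\b", text)}


message = "Track my order 1234567"
print(detect_intent(message), extract_entities(message))
```

The intent decides which action to take, while the extracted entities supply the details that action needs.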

Stage 2: Searching the database

In searching the database, the technology that lifts the performance and accuracy of AI chatbots over FAQ chatbots is Retrieval-Augmented Generation (RAG), which governs how the chatbot searches and retrieves information from the database to produce better, more accurate responses. With RAG, during the retrieval stage, an AI chatbot will first look for relevant information in the database by matching keywords or phrases. This first level of search retrieves the most relevant answers.

In addition, it'll also search the external knowledge source for relevant information, which makes it an open-domain QA system. When searching for information only from the added collateral, there's a chance the collateral isn't updated and contains outdated information. So, by doing an external search, RAG will have information from both attached and external sources. It'll combine them and pass them on to the next stage for processing and sharing with the users.

Because of the additional search capability, AI chatbots' answers are more accurate than traditional FAQ chatbots'.
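The retrieval stage described above can be sketched as follows. Both document lists and their contents are made up for illustration, the external source is simulated with an in-memory list, and ranking uses simple keyword overlap as the text describes; many RAG systems use embedding-based vector search instead.

```python
import re

# Hypothetical sample data standing in for the attached collateral and an external source.
INTERNAL_DOCS = [
    "Standard shipping takes 3 to 5 business days.",
    "Refunds are issued to the original payment method.",
]
EXTERNAL_DOCS = [
    "Carrier notice: shipping may take two extra days this week.",
]


def tokens(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, docs, top_k=1):
    """Return up to top_k documents that share the most keywords with the query."""
    query_tokens = tokens(query)
    scored = [(len(query_tokens & tokens(doc)), doc) for doc in docs]
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(query):
    """Combine internal and external results into the context passed to the next stage."""
    context = retrieve(query, INTERNAL_DOCS) + retrieve(query, EXTERNAL_DOCS)
    lines = "\n".join(f"- {passage}" for passage in context)
    return f"Answer using only this context:\n{lines}\n\nQuestion: {query}"


print(build_prompt("how long does shipping take"))
```

The resulting prompt is what gets handed to the generation stage, so the reply is grounded in the retrieved passages rather than in the model's memory alone.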

Stage 3: Drafting and sharing replies

AI chatbots have two options for drafting and sharing replies: either the NLG engine compiles the retrieved information and shares it with the users, or the information is passed through the GPT engine for more fluent responses.

How the drafting and sharing stages are handled can be decided based on the business use case and the user's expectations. AI chatbots built on RAG typically also keep a conversation memory: it stores the context of the present conversation and allows the dialogue manager to maintain it for the following exchanges.
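The conversation context mentioned above can be sketched as a short rolling buffer of recent turns. The `ConversationMemory` class and its `max_turns` limit are hypothetical; actual products may store context differently, for example as summaries or in a database.

```python
from collections import deque


class ConversationMemory:
    """Keeps the most recent turns so later replies can refer back to them."""

    def __init__(self, max_turns=5):
        # A bounded deque silently drops the oldest turn once the limit is reached.
        self.turns = deque(maxlen=max_turns)

    def add(self, role, text):
        self.turns.append((role, text))

    def as_context(self):
        """Render the stored turns as text that can be prepended to the next query."""
        return "\n".join(f"{role}: {text}" for role, text in self.turns)


memory = ConversationMemory(max_turns=2)
memory.add("user", "Where is my order?")
memory.add("bot", "Can you please enter your order number")
memory.add("user", "1234567")
print(memory.as_context())
```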

ML is deeply integrated into AI chatbots, as it allows the chatbot to learn from conversation history and gain contextual intelligence.

Build chatbots with Zoho SalesIQ

Zoho SalesIQ is a chatbot builder that allows you to build any chatbot you want for your business.

SalesIQ's no-code platform allows users to build rule-based chatbots by dragging and dropping the bot blocks to collect visitor information and answer basic queries.

With Answer Bot, SalesIQ's FAQ chatbot, resources, articles, and knowledge bases can be integrated to answer visitor queries. If the bot can't resolve them, they can be redirected to the available human agents for resolution.

If you'd like the functionalities of both the rule-based and FAQ chatbots, SalesIQ's Answer Bot Card is a hybrid bot that can be built to combine both.

SalesIQ also integrates with OpenAI's ChatGPT if you want to add AI. It can be integrated with the no-code builder and the Answer Bot based on the needs of your business.

If you'd like to see SalesIQ in action, you can sign up for a 15-day trial.

Not sure how chatbots can be used for your business? Check out our article on chatbot use cases for 16 departments and industries.

FAQs on how a chatbot works

What happens when a chatbot doesn’t understand a question?

When a chatbot doesn't understand a question, irrespective of the type of technology that enables it, a fallback mechanism will be looped into the conversation's flow. The bot will try to understand the user's query better, and if it's still not able to provide a resolution, the query will be transferred to the available human agent.

How does a chatbot integrate with my CRM, help desk, and other business applications?

What’s the difference between a chatbot and a virtual assistant?

Chatbots typically have a chat-based interface and use rule-based logic or decision trees to collect information or answer user queries. Virtual assistants, on the other hand, work through voice or text to handle personalized requests and broader functions like completing tasks and even controlling smart-home devices.

Read more about AI chatbots vs virtual assistants.